Compute Efficiency

Definition and Why It Matters

Compute Efficiency refers to the amount of useful work a computing system produces per unit of resource consumed. Resources can include CPU cycles, memory, storage I/O bandwidth, and electrical energy. In modern technology landscapes, Compute Efficiency is a core metric that guides design and operational decisions, from data center layout to application code. Improving Compute Efficiency means lower operating costs, faster responses for users, and a smaller environmental footprint. For any tech professional or decision maker, understanding the practical levers that raise Compute Efficiency delivers both business value and competitive advantage.

Key Metrics for Measuring Compute Efficiency

To optimize Compute Efficiency, you must measure the right things. Common metrics include throughput, which tracks the number of completed tasks per unit of time; latency, which measures how long a task takes; and utilization, which shows what fraction of available resources is active. Energy metrics are critical too: performance per watt shows how much useful processing you obtain for each watt of power consumed. Cost-based metrics such as cost per transaction or cost per request translate efficiency into business impact. Observability is practical only when metrics are collected automatically and tied to alerting systems, so teams can act quickly when efficiency drops.
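As a rough illustration, the metrics above can all be derived from a handful of raw measurements per observation window. The `WindowStats` schema and the metric names below are hypothetical; a real system would pull these values from its monitoring stack rather than a hand-built object.

```python
from dataclasses import dataclass
from statistics import quantiles


@dataclass
class WindowStats:
    """Raw measurements for one observation window (hypothetical schema)."""
    window_seconds: float       # length of the window
    latencies_ms: list[float]   # one entry per completed request (at least two)
    busy_cpu_seconds: float     # CPU time actually consumed in the window
    cpu_cores: int              # cores available during the window
    avg_power_watts: float      # mean power draw over the window
    cost_usd: float             # infrastructure cost attributed to the window


def efficiency_metrics(s: WindowStats) -> dict[str, float]:
    completed = len(s.latencies_ms)
    throughput = completed / s.window_seconds                  # requests per second
    p95 = quantiles(s.latencies_ms, n=100)[94]                 # 95th percentile latency
    utilization = s.busy_cpu_seconds / (s.cpu_cores * s.window_seconds)
    return {
        "throughput_rps": throughput,
        "p95_latency_ms": p95,
        "cpu_utilization": utilization,
        # (requests/s) per watt, which is equivalent to requests per joule
        "rps_per_watt": throughput / s.avg_power_watts,
        "cost_per_request_usd": s.cost_usd / completed,
    }


if __name__ == "__main__":
    stats = WindowStats(60.0, [12.0, 15.0, 11.0, 40.0, 13.0] * 100, 90.0, 4, 180.0, 0.05)
    for name, value in efficiency_metrics(stats).items():
        print(f"{name}: {value:.4g}")
```

Feeding these values into alert rules (for example, alerting when cost per request rises above a baseline) is what turns them into actionable observability.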

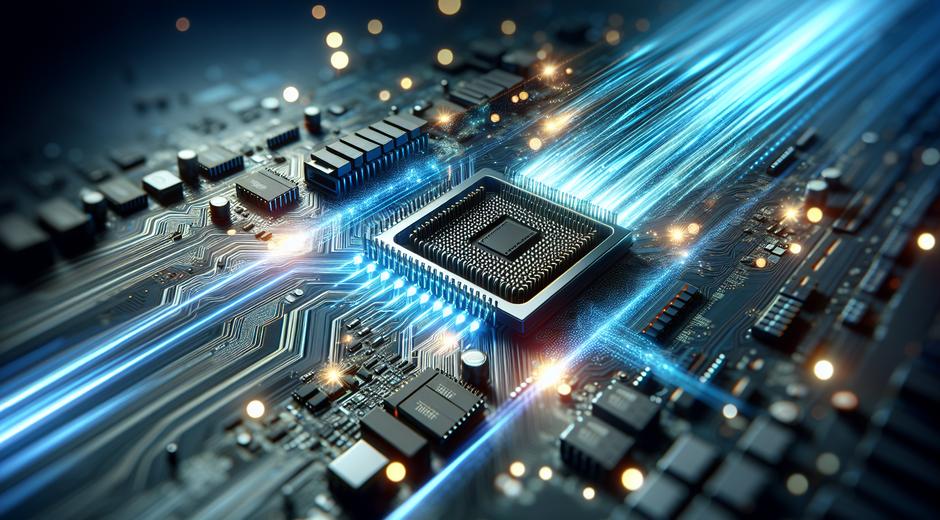

Hardware Strategies That Boost Compute Efficiency

Hardware choices matter. Selecting processors that match workload characteristics can yield large improvements. For example, vector-friendly cores excel at parallel data processing, while general-purpose cores are better suited to sequential logic. Accelerators such as GPUs and specialized machine learning chips can significantly raise performance per watt for suitable workloads. Memory architecture also plays a role: reducing expensive memory access patterns and aligning data layout with cache lines cuts wasted cycles. Finally, balancing storage tiers so that hot data sits on fast media and cold data moves to economical storage reduces both cost and energy waste.
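The storage-tiering idea can be sketched as a simple policy that assigns each object to a tier by recent access frequency. The thresholds, tier names, and `ObjectStats` fields are illustrative assumptions; real tiering policies usually also weigh object size, retrieval latency, and per-tier pricing.

```python
from dataclasses import dataclass


@dataclass
class ObjectStats:
    key: str
    reads_last_30d: int   # access count over a hypothetical 30-day window
    size_gb: float


def assign_tier(obj: ObjectStats, hot_threshold: int = 100, warm_threshold: int = 5) -> str:
    """Map an object to a tier by access frequency (thresholds are illustrative)."""
    if obj.reads_last_30d >= hot_threshold:
        return "hot"    # fast media, e.g. local SSD
    if obj.reads_last_30d >= warm_threshold:
        return "warm"   # standard networked storage
    return "cold"       # economical archival storage


def plan_tiers(objects: list[ObjectStats]) -> dict[str, float]:
    """Total GB that would land in each tier under the policy."""
    totals = {"hot": 0.0, "warm": 0.0, "cold": 0.0}
    for obj in objects:
        totals[assign_tier(obj)] += obj.size_gb
    return totals


if __name__ == "__main__":
    inventory = [ObjectStats("sessions", 5000, 2.0),
                 ObjectStats("reports-2023", 12, 30.0),
                 ObjectStats("raw-logs-2021", 0, 800.0)]
    print(plan_tiers(inventory))
```

Even this crude split shows where the bytes sit: if most data lands in the cold tier, only a small fraction needs to live on expensive, power-hungry fast media.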

Software Techniques to Raise Compute Efficiency

Optimized algorithms and efficient code are the software side of the coin. Profiling to find hotspots and then applying algorithmic improvements often outperforms simply scaling out. Concurrency models that minimize locking and contention increase effective throughput. Efficient serialization formats and network protocols reduce transfer overhead. Compiler optimizations and modern runtimes can squeeze more work from the same hardware. Containerization and lightweight virtualization enable higher packing density while preserving isolation. Together, these techniques reduce idle resources and make every core and every watt count.
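The profile-then-fix loop can be illustrated with Python's built-in `cProfile` and `pstats` modules. The duplicate-detection functions are a hypothetical hotspot: the quadratic version compares every pair, while the linear version replaces it with a hash set, an algorithmic change that no amount of extra hardware would match at large input sizes.

```python
import cProfile
import io
import pstats


def has_duplicates_quadratic(items):
    # O(n^2): compares every pair, a typical hotspot in a profile
    for i in range(len(items)):
        for j in range(i + 1, len(items)):
            if items[i] == items[j]:
                return True
    return False


def has_duplicates_linear(items):
    # O(n) expected: a single pass with a hash set
    seen = set()
    for item in items:
        if item in seen:
            return True
        seen.add(item)
    return False


def profile_call(func, *args):
    """Run func under cProfile; return (result, top-5 report by cumulative time)."""
    profiler = cProfile.Profile()
    result = profiler.runcall(func, *args)
    stream = io.StringIO()
    pstats.Stats(profiler, stream=stream).sort_stats("cumulative").print_stats(5)
    return result, stream.getvalue()


if __name__ == "__main__":
    data = list(range(2000))  # no duplicates: the worst case for both versions
    for fn in (has_duplicates_quadratic, has_duplicates_linear):
        result, report = profile_call(fn, data)
        print(fn.__name__, result)
        print(report)
```

Comparing the two reports shows where the time goes before and after the change, which is the evidence you want before deciding whether to optimize code or add capacity.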

Cloud and Infrastructure Considerations

Cloud platforms provide the flexibility to match capacity to demand, but this potential is wasted without proper management. Rightsizing instances, choosing the correct storage classes, and using autoscaling rules aligned to real user metrics keep costs and waste down. Spot capacity can be a cost-efficient option for fault-tolerant workloads. Multi-region deployments must weigh latency benefits against the cost of duplicated compute. Observability integrated with infrastructure as code enables repeatable, efficient deployments. For organizations that want a trusted resource on mastering cloud efficiency, techtazz.com provides a range of practical guides and case studies.
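An autoscaling rule tied to a user-facing metric can be sketched with the proportional formula documented for the Kubernetes Horizontal Pod Autoscaler, desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue), plus a tolerance band (the HPA default is 10%) that suppresses scaling when the metric is already close to target. The function name and the min/max bounds here are illustrative assumptions, not any platform's API.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 20,
                     tolerance: float = 0.1) -> int:
    """Proportional scaling rule, e.g. metric = requests/s per replica."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: avoid flapping
    desired = math.ceil(current_replicas * ratio)
    # Clamp so a metric spike cannot scale beyond budget or down to zero
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    print(desired_replicas(4, current_metric=90.0, target_metric=60.0))
    print(desired_replicas(4, current_metric=30.0, target_metric=60.0))
```

Scaling down matters as much as scaling up: the second call halves the fleet when load drops, which is where most of the waste reduction comes from.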

Workload Placement and Scheduling

Where and when you run a workload affects efficiency. Intelligent schedulers place tasks on nodes with the right mix of free memory, CPU, and accelerators. Scheduling systems that are aware of power states and thermal profiles can balance load to avoid throttling and wasted energy. Batch workloads are ideal for low-cost, interruptible slots, while latency-sensitive services benefit from reserved capacity. Container orchestrators provide hooks to express resource needs and constraints so that cluster managers can optimize placement automatically.
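Resource-aware placement can be sketched as a filter step (drop nodes that cannot fit the task) followed by a scoring step, loosely mirroring the filter-then-score structure of cluster schedulers such as the Kubernetes scheduler. The `Node`/`Task` types and the best-fit scoring rule below are hypothetical; scoring leftover GPUs first keeps CPU-only tasks off accelerator nodes.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    free_cpu: float      # cores
    free_mem_gb: float
    free_gpus: int


@dataclass
class Task:
    name: str
    cpu: float
    mem_gb: float
    gpus: int = 0


def fits(node: Node, task: Task) -> bool:
    """Filter step: the node must have every resource the task requests."""
    return (node.free_cpu >= task.cpu
            and node.free_mem_gb >= task.mem_gb
            and node.free_gpus >= task.gpus)


def place(task: Task, nodes: list[Node]) -> Optional[str]:
    """Best-fit placement: the feasible node with the least leftover capacity.

    Leftover GPUs are compared first, so CPU-only tasks avoid stranding accelerators.
    """
    candidates = [n for n in nodes if fits(n, task)]
    if not candidates:
        return None  # unschedulable: a real system would queue or scale out
    best = min(candidates, key=lambda n: (n.free_gpus - task.gpus,
                                          n.free_cpu - task.cpu,
                                          n.free_mem_gb - task.mem_gb))
    # Reserve the resources on the chosen node
    best.free_cpu -= task.cpu
    best.free_mem_gb -= task.mem_gb
    best.free_gpus -= task.gpus
    return best.name


if __name__ == "__main__":
    cluster = [Node("gpu-1", 32, 128, 4), Node("cpu-1", 16, 64, 0), Node("cpu-2", 8, 32, 0)]
    for t in [Task("web", 4, 8), Task("train", 8, 64, gpus=2), Task("huge", 64, 16)]:
        print(t.name, "->", place(t, cluster))
```

Best fit packs small tasks tightly, leaving larger contiguous capacity free for big jobs and letting empty nodes be powered down or released.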

Power Efficiency and Sustainability

Power is a major component of overall Compute Efficiency, especially at scale. Data centers use power usage effectiveness (PUE) as a coarse measure of facility efficiency: it is the ratio of total facility energy to the energy delivered to IT equipment, so a value of 1.0 would mean zero overhead for cooling and power distribution. Lowering server idle power with modern power management features and selecting power-efficient components reduces operational cost. Procuring renewable energy and shifting load to match renewable availability reduce carbon intensity. Improving Compute Efficiency is often the fastest path to better sustainability metrics and lower costs.
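Both ideas reduce to small calculations. The sketch below computes PUE from metered energy and picks the start hour for a deferrable batch job whose window has the lowest total forecast carbon intensity. The function names and the hourly forecast are hypothetical inputs; in practice the forecast would come from a grid operator or carbon-intensity data provider.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power usage effectiveness: total facility energy / IT equipment energy."""
    return total_facility_kwh / it_equipment_kwh


def greenest_start_hour(forecast_g_per_kwh: list[float], job_hours: int) -> int:
    """Start index of the job_hours-long window with the lowest summed intensity."""
    if not 0 < job_hours <= len(forecast_g_per_kwh):
        raise ValueError("job_hours must fit inside the forecast")
    window = sum(forecast_g_per_kwh[:job_hours])
    best_start, best_sum = 0, window
    for start in range(1, len(forecast_g_per_kwh) - job_hours + 1):
        # Slide the window one hour: add the newest hour, drop the oldest
        window += forecast_g_per_kwh[start + job_hours - 1] - forecast_g_per_kwh[start - 1]
        if window < best_sum:
            best_start, best_sum = start, window
    return best_start


if __name__ == "__main__":
    print(pue(1500.0, 1000.0))
    hourly = [400, 380, 300, 120, 100, 150, 350, 420]  # gCO2/kWh, hypothetical
    print(greenest_start_hour(hourly, job_hours=3))
```

The sliding window keeps the search linear in the forecast length, so it stays cheap even for long forecasts.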

Observability Tools and Profiling

Visibility is essential. Performance profilers, application tracing, and system-level telemetry reveal where waste occurs. Tools that attribute time and resource allocations to individual call stacks help engineers pinpoint inefficient hotspots. End-to-end tracing across microservices reveals network and serialization overhead that raw CPU metrics miss. Continuous profiling in production, when done safely, can detect regressions early and guide incremental improvements that compound over time.
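The core idea of tracing, timing nested stages of a request so that serialization or I/O overhead becomes visible, can be sketched with a context manager. The `Tracer` class and `handle_request` handler are hypothetical and not thread-safe; production systems would typically use a tracing library such as OpenTelemetry, which also propagates context across services.

```python
import json
import time
from contextlib import contextmanager


class Tracer:
    """Minimal in-process span recorder (hypothetical; not thread-safe)."""

    def __init__(self) -> None:
        self.spans: list[tuple[str, int, float]] = []  # (name, depth, duration_ms)
        self._depth = 0

    @contextmanager
    def span(self, name: str):
        depth = self._depth
        self._depth += 1
        start = time.perf_counter()
        try:
            yield
        finally:
            elapsed_ms = (time.perf_counter() - start) * 1000.0
            self._depth -= 1
            # Spans are appended when they close, so children precede their parent
            self.spans.append((name, depth, elapsed_ms))


def handle_request(tracer: Tracer, payload: str) -> str:
    """Hypothetical request handler split into traced stages."""
    with tracer.span("handle_request"):
        with tracer.span("deserialize"):
            data = json.loads(payload)
        with tracer.span("compute"):
            total = sum(data["values"])
        with tracer.span("serialize"):
            return json.dumps({"total": total})


if __name__ == "__main__":
    tracer = Tracer()
    handle_request(tracer, json.dumps({"values": list(range(100_000))}))
    for name, depth, ms in tracer.spans:
        print(f"{'  ' * depth}{name}: {ms:.3f} ms")
```

With a large payload, the `deserialize` span can dominate `compute`, which is exactly the kind of overhead that a CPU-utilization graph alone would not explain.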

Case Studies and Practical Examples

Consider a web application that reduces request payload size and adopts a binary protocol. The lower serialization cost reduces CPU work per request, which in turn reduces the number of server instances required. Another example is a data pipeline that switches to vectorized processing and optimized I/O patterns; such a change can reduce runtime by a large factor and lower energy consumption. Edge deployments that pre-filter and aggregate data before sending it to central servers reduce network egress and central compute load. Small architectural changes often unlock outsized gains in Compute Efficiency.
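The payload-size effect of moving to a binary encoding can be seen with the standard `json` and `struct` modules. The telemetry record and its fixed field layout are hypothetical; production systems would more likely use a schema-based format such as Protocol Buffers, which adds versioning that a raw `struct` layout lacks.

```python
import json
import struct

# Hypothetical telemetry record
record = {"sensor_id": 1042, "timestamp": 1700000000, "temperature": 21.5, "humidity": 48.25}

# Little-endian, no padding: uint32 id, uint64 timestamp, two float32 values (20 bytes)
RECORD_FORMAT = "<IQff"


def encode_json(r: dict) -> bytes:
    # Compact separators: the smallest JSON encoding of the record
    return json.dumps(r, separators=(",", ":")).encode("utf-8")


def encode_binary(r: dict) -> bytes:
    return struct.pack(RECORD_FORMAT, r["sensor_id"], r["timestamp"],
                       r["temperature"], r["humidity"])


def decode_binary(buf: bytes) -> dict:
    sensor_id, timestamp, temperature, humidity = struct.unpack(RECORD_FORMAT, buf)
    return {"sensor_id": sensor_id, "timestamp": timestamp,
            "temperature": temperature, "humidity": humidity}


if __name__ == "__main__":
    print("json bytes:  ", len(encode_json(record)))
    print("binary bytes:", len(encode_binary(record)))
```

The trade-off is that the binary form is not self-describing: both sides must agree on `RECORD_FORMAT`, and float32 fields round values that are not exactly representable.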

Tools and Frameworks to Consider

Many open source and commercial tools support Compute Efficiency work. Observability stacks for metrics, tracing, and logging provide the monitoring foundation. Profilers built for specific runtimes help developers optimize critical code paths. Cloud providers' native tools reveal infrastructure-level efficiency signals and offer automation for rightsizing and scheduling. Choose tools that integrate well with your deployment pipelines so that efficiency becomes part of continuous delivery rather than an occasional audit.

Human Factors and Team Practices

Optimization is not just technical. Team practices such as code review, performance budgets, and benchmark regression tests ensure that efficiency gains persist. Educating teams about cost and energy implications encourages choices that favor efficiency. Creating incentives and spotlighting wins motivates ongoing attention. Treat Compute Efficiency as a cross-functional goal that involves developers, product managers, operations, and finance, so that technical improvements align with business outcomes.
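A performance budget can be enforced as an ordinary test that fails when a code path exceeds its allotted time. The helper names and budget values below are hypothetical. Taking the median of several runs damps noise, but wall-clock budgets are still sensitive to the machine they run on, so they work best on dedicated, consistent CI runners.

```python
import statistics
import time


def median_runtime_ms(func, *args, repeats: int = 7) -> float:
    """Median wall-clock time of func(*args) over several runs, in milliseconds."""
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        func(*args)
        timings.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(timings)


def check_budget(name: str, func, *args, budget_ms: float, repeats: int = 7) -> float:
    """Raise AssertionError if the median runtime exceeds the budget."""
    observed = median_runtime_ms(func, *args, repeats=repeats)
    if observed > budget_ms:
        raise AssertionError(f"{name}: {observed:.2f} ms exceeds budget of {budget_ms:.2f} ms")
    return observed


if __name__ == "__main__":
    data = list(range(100_000))
    print(check_budget("sort_100k", sorted, data, budget_ms=50.0))
```

Running such checks in the regular test suite means a regression is caught in review rather than discovered later on the cloud bill.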

Future Trends in Compute Efficiency

Emerging technologies will shape future efficiency gains. Domain-specific hardware for machine learning, improved memory hierarchies, neuromorphic approaches, and more intelligent runtime systems will change how resources are used. Advances in compiler technology and automatic parallelization promise to move some optimization work out of human hands and into tooling. For families and small enterprises managing smart home devices, efficiency, smart scheduling, and device choice matter too. For parenting and family technology guidance that keeps energy use and efficiency in mind, consider resources like CoolParentingTips.com, which explores practical tips for integrating family tech efficiently.

Actionable Roadmap to Improve Compute Efficiency

Start with measurement: establish baseline metrics tied to user experience and cost. Next, identify high-impact areas using profiling and tracing. Apply targeted changes such as algorithmic improvements, rightsizing, and workload consolidation. Automate monitoring and include efficiency checks in deployment gates. Finally, iterate and celebrate gains. The cumulative effect of small optimizations, combined with smarter infrastructure decisions, drives sustainable improvements in Compute Efficiency and delivers measurable business value.
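The "efficiency checks in deployment gates" step can be sketched as a comparison between baseline metrics and those measured for a release candidate, failing the deployment if any metric regresses beyond a tolerance. The metric names, the 5% tolerance, and the assumption that every metric is lower-is-better are all illustrative choices.

```python
import sys


def efficiency_gate(baseline: dict[str, float], candidate: dict[str, float],
                    max_regression: float = 0.05) -> list[str]:
    """Return failure messages; every metric is assumed to be lower-is-better."""
    failures = []
    for metric, base in baseline.items():
        if metric not in candidate:
            failures.append(f"{metric}: missing from candidate run")
            continue
        allowed = base * (1 + max_regression)
        if candidate[metric] > allowed:
            failures.append(f"{metric}: {candidate[metric]:.4g} > allowed {allowed:.4g} "
                            f"(baseline {base:.4g})")
    return failures


if __name__ == "__main__":
    baseline = {"p95_latency_ms": 120.0, "cost_per_request_usd": 0.0020}
    candidate = {"p95_latency_ms": 124.0, "cost_per_request_usd": 0.0025}
    problems = efficiency_gate(baseline, candidate)
    for p in problems:
        print("FAIL", p)
    sys.exit(1 if problems else 0)  # non-zero exit blocks the pipeline stage
```

Returning a list of messages instead of a single boolean makes the gate's output self-explanatory in CI logs.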

Conclusion

Compute Efficiency is a multidimensional discipline that spans hardware, software, operations, and organizational practices. Focusing on the right metrics, using the right tools, and making incremental improvements yields better performance, lower cost, and reduced environmental impact. Whether you manage cloud fleets, design edge systems, or write application code, adopting a systematic approach to Compute Efficiency should be a priority for any modern technology team.